AI Chronicles: Dialogues Beyond Time

A Multimodal Multicasting Production

Pilot Project Starring: Steve Jobs and Aristotle

Presented by Divine FreeMan

Steve Jobs and Aristotle come to life, powered by advanced multicasting technology. In this episode of AI Chronicles, watch as Steve Jobs interacts autonomously with Aristotle, bridging centuries of thought and innovation. Guided by human supervision, this simulation explores how artificial intelligence can recreate and connect the minds of the past with the pioneers of the modern era. Science, art, and culture converge into a harmonious cycle, offering unique insights into philosophy, creativity, and technology.

Steve Jobs' Vision in Aspen, 1983

In 1983, during the International Design Conference in Aspen, Steve Jobs envisioned a future where we could capture the worldview of a modern Aristotle and interact with it, asking questions and receiving profound insights. Inspired by this vision, we have developed a multicasting multimodal system that enables groundbreaking simulations.

This tool represents a new kind of interactive reality, combining advanced AI with dynamic, on-demand experiences. By harnessing the latest advances in artificial intelligence, our platform creates a space where extended realities converge with human curiosity, unlocking the potential to engage with knowledge and perspectives in transformative ways. This is not just a simulation; it's a glimpse into a future where interactivity reshapes how we learn, create, and connect.

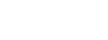

The Architecture of Multimodal Multicasting

Multicasting and AI Architecture

The multicasting system orchestrated the dialogue between the personas of Steve Jobs and Aristotle. This architecture supports adaptive, intelligent systems suited to simulation, training, or dynamic communication networks. It relies on AI-driven APIs to generate each agent's contributions and to coordinate collaboration among agents, while WebSockets provide real-time communication throughout the system.
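The orchestration idea can be illustrated with a minimal sketch. It is not the project's implementation: the `Persona` class, the `scripted` reply function (a stand-in for a call to an AI API), and the in-process `asyncio.Queue` objects (stand-ins for WebSocket channels) are all assumptions made for illustration. It shows two personas alternating turns while every utterance is multicast to several consumers, such as a voice stage and an animation stage.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable, List, Optional, Tuple

Utterance = Tuple[str, str]  # (speaker name, text)


@dataclass
class Persona:
    name: str
    # Hypothetical stand-in for a call to an AI text-generation API.
    reply: Callable[[str], Awaitable[str]]


async def run_dialogue(first: Persona, second: Persona, opening: str,
                       turns: int, subscribers: List[asyncio.Queue]) -> None:
    """Alternate turns and multicast every utterance to all subscriber queues."""
    message = opening
    speaker, listener = first, second
    for _ in range(turns):
        message = await speaker.reply(message)
        for queue in subscribers:  # one utterance, many consumers
            await queue.put((speaker.name, message))
        speaker, listener = listener, speaker
    for queue in subscribers:
        await queue.put(None)  # end-of-stream marker


def scripted(name: str) -> Callable[[str], Awaitable[str]]:
    """Build a placeholder reply function; a real system would call a model."""
    async def reply(heard: str) -> str:
        await asyncio.sleep(0)  # yield control, as a network call would
        return f"{name} responds to: {heard[:30]}"
    return reply


async def drain(queue: asyncio.Queue) -> List[Utterance]:
    """Collect everything a subscriber received up to the end marker."""
    items: List[Utterance] = []
    while True:
        item: Optional[Utterance] = await queue.get()
        if item is None:
            return items
        items.append(item)


async def demo() -> List[List[Utterance]]:
    jobs = Persona("Steve Jobs", scripted("Steve Jobs"))
    aristotle = Persona("Aristotle", scripted("Aristotle"))
    voice_queue: asyncio.Queue = asyncio.Queue()
    animation_queue: asyncio.Queue = asyncio.Queue()
    await run_dialogue(jobs, aristotle, "What is a good tool?", 4,
                       [voice_queue, animation_queue])
    return [await drain(voice_queue), await drain(animation_queue)]


if __name__ == "__main__":
    for speaker_name, text in asyncio.run(demo())[0]:
        print(f"{speaker_name}: {text}")
```

Keeping the turn-taking loop separate from the subscribers means new consumers (for example a subtitle renderer) can be added without touching the dialogue logic.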

Integrating Multicasting with Photorealistic 3D Experiences: The Choice for Unreal

During the research, several platforms were studied to deliver a 3D experience with maximum transmedia portability. Unreal and Unity technologies were the preferred options for integration with multicasting. While both are powerful game engines for 3D and 2D development, they have differences in their strengths and use cases. Our main objective was to prove the concept and integrability with multicasting. Unreal was chosen for its ability to offer photorealistic features, such as MetaHumans, and to allow maximum creative flexibility.

The integration of Unreal Engine, Omniverse, and Audio2Face was essential to achieve precise and efficient lip sync during the simulation. The workflow involved the following steps:

- Omniverse and Audio2Face

Audio2Face, an AI-powered tool by NVIDIA, was used to generate facial animations synced to audio input. It transforms audio features into facial movements, automatically creating expressions and lip synchronization in an asynchronous manner.

Omniverse acted as an intermediary platform, connecting Audio2Face to Unreal Engine, providing a collaborative environment and enabling real-time adjustments to the generated animations.

- Integration with Unreal Engine

After generating the animation data in Audio2Face, it was exported to Unreal Engine using the Omniverse Connector, a tool that facilitates data exchange between platforms. In Unreal Engine, the data was directly applied to 3D models (such as MetaHumans), leveraging the engine's high-quality rendering and support for photorealistic facial details.

- Synchronization and Adjustments

The integration allowed detailed adjustments to ensure that facial expressions and lip movements appeared natural and consistent with the cloned voices. While the main integration used asynchronous data, the potential for implementing real-time solutions was explored, further enhancing the system's interactivity.

This setup ensured that the lip sync was highly accurate and visually compelling, maintaining the quality and efficiency required for the project.
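One practical detail of an asynchronous lip-sync workflow is that animation generated offline at one frame rate may need to be played back at another. The sketch below is illustrative only and does not use any Audio2Face, Omniverse, or Unreal Engine API: it assumes a facial animation track is simply a list of per-frame weights (for example a hypothetical "jaw open" value between 0 and 1) sampled at a fixed rate, and resamples it by linear interpolation.

```python
from typing import List


def resample_track(weights: List[float], source_fps: float,
                   target_fps: float) -> List[float]:
    """Linearly resample a per-frame weight track to a new frame rate.

    The first and last samples keep their positions in time, so the
    track's duration (and its alignment with the audio) is preserved.
    """
    if not weights:
        return []
    last_index = len(weights) - 1
    # Number of target frames covering the same duration as the source track.
    frame_count = round(last_index * target_fps / source_fps) + 1
    resampled = []
    for i in range(frame_count):
        # Position of target frame i, measured in source frames.
        position = min(i * source_fps / target_fps, last_index)
        lower = int(position)
        upper = min(lower + 1, last_index)
        fraction = position - lower
        resampled.append(weights[lower] * (1 - fraction)
                         + weights[upper] * fraction)
    return resampled


if __name__ == "__main__":
    jaw_open_30fps = [0.0, 1.0, 0.0]  # made-up sample values
    print(resample_track(jaw_open_30fps, source_fps=30, target_fps=60))
```

Linear interpolation is the simplest choice here; a production pipeline would more likely rely on the animation curves and interpolation provided by the engine itself.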

Voice Cloning: Creating Immersive Experiences with Sound Credibility

Several questions were raised about how to clone voices for the simulation. Today, there are numerous platforms that support API integration with neural multilanguage voices, such as Google Speech and Azure. However, using voices that did not closely resemble Steve Jobs's would not sufficiently convince the audience. The goal was to enable the experience to suspend disbelief for a few minutes, allowing the exploration of something deeply captivating and credible.

Platforms such as Parrot AI and ElevenLabs:

- Enable voice cloning with an impressive level of realism.

- Replicate vocal nuances to deliver highly engaging auditory experiences.

- Integrate into photorealistic 3D experiences in Unreal Engine, fostering learning and innovation.

These technologies were employed in the project to enhance authenticity, with the educational goal of exploring immersive simulations and with no intention of infringing on copyrights.

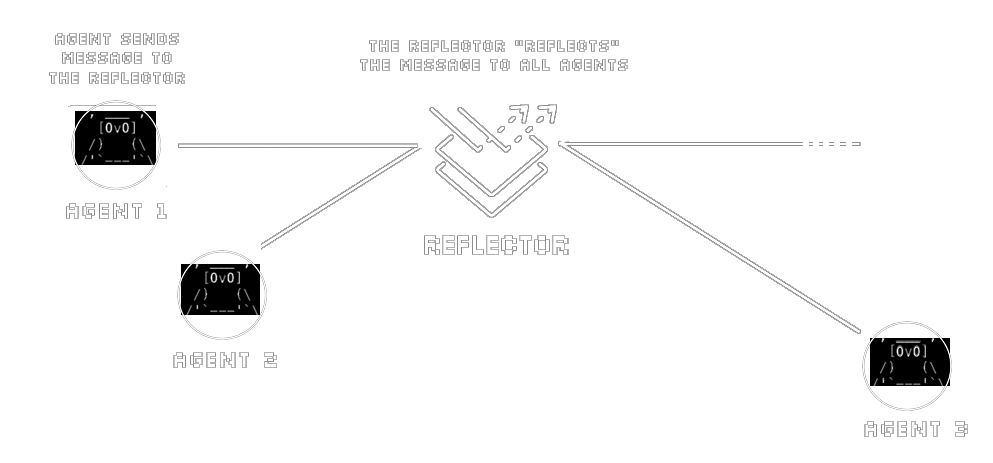

Creating 3D Models of Steve Jobs and Aristotle

The creation of the 3D models of Steve Jobs and Aristotle involved strategic decisions to capture the essence of each historical figure.

- Steve Jobs: The goal was to craft a model faithful to the image he holds in the collective imagination, emphasizing his iconic visual identity.

- Aristotle: The model was based on classical representations, such as statues and historical busts, ensuring a strong visual connection to ancient artistic traditions.

These approaches leveraged technologies like ChatAvatar and TurboSquid, ensuring precision and hyper-realistic quality, optimized for integration into simulation and multicasting systems.

Preview: Style in the Final Product

A crucial phase of the project focused on pre-visualization and defining the artistic style for the final product.

- Tools like MidJourney were used to explore innovative visual concepts.

- Techniques involving 2D animation with AI enabled unique possibilities for characterization.

- The scene's ambiance was meticulously crafted to ensure full immersion.

- MidJourney: This tool was used to generate visual concepts and explore stylistic variations that balanced realism with artistic aesthetics. It helped create visual references for both the characters and the environments, ensuring a cohesive creative direction.

- 2D Animation with AI: AI models were employed to produce quick, stylized animations, allowing for visualization of character interactions in different settings. This approach also helped define textures, colors, and movements that could later be incorporated into the 3D animations.

The combination of these techniques enabled creative experimentation and provided a clear vision of the final product, aligning the aesthetics with the project's narrative and technological goals.

During the experiments, it was observed that certain methods could significantly enhance the level of photorealism in the scenes. However, such an approach would compromise the portability and transmedia nature of the project, which were considered essential for its success.

Storyboard Development: Jobs-Aristotle Simulation

Once the platform and dialogue framework (Unreal Engine connected to the multicasting system) were established, the storyboard became an essential component in transitioning the project from concept to production.

The storyboard provided a clear visual and narrative roadmap, ensuring that every scene aligned with the project's vision of bridging the philosophies of Steve Jobs and Aristotle. It offered a structure that streamlined the creative process and facilitated technical planning by mapping out:

- Camera angles;

- Shot sequences;

- Dialogue delivery.
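For technical planning, the elements a storyboard maps out (camera angles, shot sequences, and dialogue delivery) could be captured as a simple shot list. The sketch below is an assumption about how such data might be organized, not the format used in the project; the `Shot` fields, the sample lines, and the durations are placeholders invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Shot:
    camera_angle: str   # e.g. "wide", "close-up"
    speaker: str        # who delivers the line in this shot
    line: str           # placeholder dialogue text
    duration_s: float   # planned length of the shot in seconds


def schedule(shots: List[Shot]) -> List[Tuple[float, Shot]]:
    """Return (start time in seconds, shot) pairs in storyboard order."""
    timeline = []
    clock = 0.0
    for shot in shots:
        timeline.append((clock, shot))
        clock += shot.duration_s
    return timeline


# Placeholder storyboard; the lines are not taken from the actual episode.
STORYBOARD = [
    Shot("wide", "Divine FreeMan", "Introduction of the two guests.", 4.0),
    Shot("close-up", "Steve Jobs", "Opening question.", 6.0),
    Shot("over-the-shoulder", "Aristotle", "First answer.", 5.0),
]

if __name__ == "__main__":
    for start, shot in schedule(STORYBOARD):
        print(f"{start:5.1f}s  {shot.camera_angle:18} {shot.speaker}: {shot.line}")
```

A list like this can be handed to both the animation and the audio stages so that camera cuts and dialogue delivery share the same timeline.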

Divine FreeMan: The Timeless Encounters Presenter

Divine FreeMan is a photorealistic MetaHuman, created using the advanced technology of Unreal Engine. Designed as a sophisticated presenter, Divine FreeMan's role is to facilitate and mediate hypothetical, timeless encounters with a futuristic perspective.

Seamlessly integrated into Unreal's dynamic systems, Divine FreeMan offers:

- Adaptive communication;

- The ability to deliver complex narratives in an engaging and interactive way;

- A unique bridge between humanity and cutting-edge digital representations.

His presence enhances the immersive experience of projects like "Jobs-Aristotle", ensuring a captivating and thought-provoking interaction between history, philosophy, and modern advancements.

End Credits

Multimodal Multicasting is an ongoing research project by Visgraf Lab in partnership with VFXRIO.

VFXROMA:

- Matteo Moriconi: Direction and VFX Supervision

- Emiliano Morciano: Animation and Light Design

VFXRIO:

- Luiz Velho: Production and Editing

- Cris Lyra: Storyboard and Camera

- Bernardo Alevato: Graphics and Visual Effects

This project represents an important milestone in exploring the integration of AI and cutting-edge technologies into transmedia storytelling. Moving forward, we will implement further advancements to refine the pipeline, ensuring it fully supports the dynamic needs of transmedia experiences.

While the use of AI was fundamental to achieving many aspects of this project, we recognize the areas where the human touch remains essential. The interplay of human creativity and AI-driven tools is key to maintaining authenticity, emotional depth, and a sense of artistry that cannot yet be replicated solely by machines. This balance will continue to guide us as we push the boundaries of storytelling and immersive experiences.

Avant-première